Apple was granted a new patent by the USPTO this week, detailing a technology that deals with light projection and in-air gesturing. It looks like a PrimeSense-derived technology, able to recognize and interpret in-air gestures.

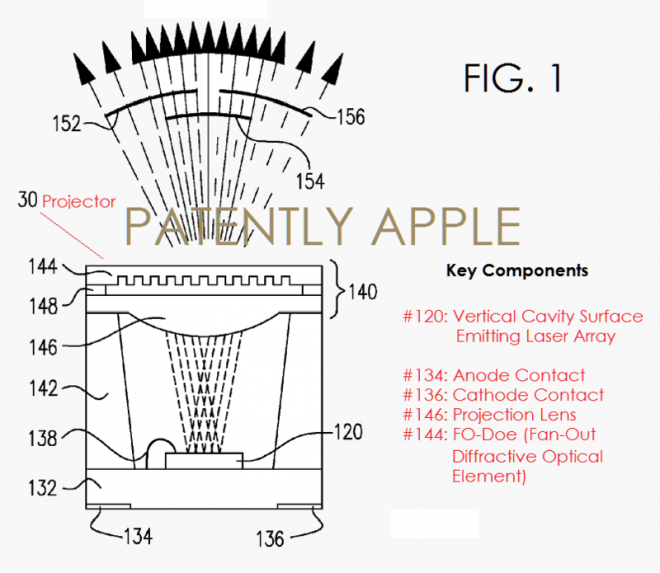

The documents mention an optoelectronic device that includes a semiconductor substrate, an array of optical emitters arranged on that substrate in a 2D pattern, a projection lens and a diffractive optical element. The projection lens is mounted on the semiconductor substrate and is meant to collect and focus the light from the optical emitters, projecting optical beams whose light pattern corresponds to the emitters' 2D layout on the substrate.

The pattern of light is cast onto an object or scene, and a processing system then analyzes the projected pattern and creates a 3D map of that object or scene. This depth map can be used to map the surroundings and detect user gestures, plus it may help with architecture projects, much like Intel RealSense or Project Tango.
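
For the curious, here is a rough sketch of how a depth map can be computed from a projected pattern. It assumes plain triangulation, where each dot of the pattern appears shifted sideways in the camera image by an amount (the disparity) that shrinks as the surface gets farther away. The patent coverage does not spell out the math, so the function names, the focal length and the baseline below are hypothetical values picked for illustration, not Apple's method.

```python
from typing import List, Optional


def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> Optional[float]:
    """Triangulate depth (in meters) for one pattern dot.

    disparity_px:    sideways shift of the dot in the camera image, in pixels
    focal_length_px: camera focal length in pixels (assumed value)
    baseline_m:      projector-to-camera distance in meters (assumed value)
    Returns None when the dot was not matched (disparity <= 0).
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities: List[List[float]],
              focal_length_px: float,
              baseline_m: float) -> List[List[Optional[float]]]:
    """Turn a 2D grid of disparities into a 2D grid of depths."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparities
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 disparity grid; 0.0 marks a dot that was not found.
    grid = [[29.0, 0.0],
            [58.0, 14.5]]
    for row in depth_map(grid, focal_length_px=580.0, baseline_m=0.075):
        print(row)
```

Larger shifts mean closer surfaces, which is why a hand waved in front of the sensor stands out clearly from the background in the resulting map.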

iDevices are mentioned in connection with this patent, but we’re a long way from iPhones with projectors, believe me…

via patentlyapple